AI-native moats and the end of recurring revenue

Tiffine Wang is a global venture capitalist focused on AI and tech who also does board advisory work.

Lately I’ve been spending a lot of time evaluating later-stage AI-native startups, and their rise is changing more than just what products look like.

They are rewriting how revenue is earned, how customers are served, and how moats are built. Traditional SaaS promised stability through predictable recurring revenue, but that playbook is changing. Tokens, usage-based pricing and continuous intelligence are colliding with the old subscription model, opening the door to an entirely new economics of software.

From revenue predictability to dynamic value

Prior to the AI-native era, SaaS businesses were king because of their revenue predictability. Investors loved the steady stream of monthly or annual contracts.

AI-native models break that logic by introducing volatility. Customers pay for tokens, compute, or outcomes instead of static licenses, so revenue can surge with adoption but shrink just as quickly when workloads are optimized. This forces founders and investors to rethink what quality of revenue means.

AI delivery costs vary, so pricing tends to shift too. Instead of seats or modules, AI-native models may price in real time: per token, per conversation, or per measured outcome. Customers still want predictability, which is why the most successful models will balance flexibility with stability. Our relationship with software pricing is evolving, moving from fixed fees to models based on consumption and outcomes.

Features to intelligence as the product

Legacy companies bolt AI onto existing products, but AI-native companies build their business around intelligence itself. Each customer interaction generates data, and each dataset compounds learning. Each use makes the product smarter, making its value inseparable from its pace of learning. In this world, features are short-lived and quickly commoditized, while intelligence drives enduring value.

SaaS businesses scaled by adding people, processes, and infrastructure. AI-native companies take a different approach: algorithms manage routine tasks, while humans focus on oversight and strategy. Each cycle of data strengthens the system, attracts more users and creates more data in return. Growth comes from the velocity of this flywheel rather than headcount.

The compute cost reality

Going back to my infrastructure roots, I see a difficult economic truth behind every AI-native business model: compute is expensive. Training large models can cost tens or even hundreds of millions of dollars. Running them at scale is often more costly than building them. Every token generated burns GPU cycles, consumes energy and requires specialized infrastructure.

For infrastructure companies, this creates constant tension. On one side, usage-based and tokenized pricing drives powerful growth flywheels. On the other, margins are squeezed by the cost of inference. Unlike SaaS, where incremental usage is nearly free, AI-native workloads carry variable costs that rise in lockstep with revenue.
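To make the margin squeeze concrete, here is a minimal sketch of the arithmetic. Every number in it is a hypothetical assumption chosen for illustration, not data from any company: both offerings earn the same revenue per unit of usage, but the AI-native one pays a much larger variable delivery cost per unit.

```python
from dataclasses import dataclass


@dataclass
class Offering:
    """Toy unit-economics model. All figures are illustrative assumptions."""
    name: str
    price_per_unit: float  # revenue earned per unit of usage
    cost_per_unit: float   # variable delivery cost per unit (e.g. inference)
    fixed_cost: float      # cost that does not scale with usage

    def gross_margin(self, units: int) -> float:
        """Gross margin as a fraction of revenue at a given usage level."""
        revenue = self.price_per_unit * units
        cost = self.fixed_cost + self.cost_per_unit * units
        return (revenue - cost) / revenue


# Hypothetical inputs: same price, very different variable cost.
saas = Offering("seat-based SaaS", price_per_unit=100.0, cost_per_unit=2.0, fixed_cost=10_000.0)
ai_native = Offering("token-priced AI", price_per_unit=100.0, cost_per_unit=60.0, fixed_cost=10_000.0)

for units in (1_000, 10_000, 100_000):
    print(
        f"{units:>7} units: "
        f"SaaS margin {saas.gross_margin(units):.1%}, "
        f"AI-native margin {ai_native.gross_margin(units):.1%}"
    )
```

In this toy model, margin at scale approaches one minus the ratio of unit cost to unit price. With the assumed inputs, the SaaS offering trends toward 98% while the AI-native offering plateaus near 40%, no matter how much usage grows. Scale alone does not fix the margin; only a lower cost per unit or a higher price per unit does.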

This is why infrastructure players, from cloud providers like AWS, Google, and Azure to specialized hardware firms like Nvidia, Cerebras, and Tenstorrent, capture a disproportionate share of value. Every model, application and token ultimately flows through their silicon and data centers. For founders, this means designing business models with an eye not only on growth but also on compute efficiency.

In my conversations with AI-native startups and customers, one theme keeps coming up: customers want the benefits of flexible, usage-based pricing, but they also need predictability to plan budgets and manage risk. This tension is pushing companies to experiment with hybrid models that blend stability with adaptability. They preserve the recurring revenue investors still value while tying upside more closely to usage and outcomes.
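One way to picture such a hybrid is a committed base fee that includes a usage allowance, plus metered overage and an optional spending cap. The sketch below is a hypothetical illustration of that idea, not a description of any specific company's pricing; the function name, parameters and figures are all assumptions.

```python
from typing import Optional


def hybrid_invoice(
    units_used: int,
    base_fee: float,
    included_units: int,
    overage_rate: float,
    cap: Optional[float] = None,
) -> float:
    """Monthly bill under a hypothetical hybrid plan.

    base_fee       -- recurring commitment, billed regardless of usage
    included_units -- usage covered by the base fee
    overage_rate   -- price per unit beyond the included allowance
    cap            -- optional ceiling that keeps the customer's budget predictable
    """
    overage_units = max(0, units_used - included_units)
    total = base_fee + overage_units * overage_rate
    if cap is not None:
        total = min(total, cap)
    return total


# Illustrative plan: 1,000 base fee, 1,000 units included, 0.50 per extra unit.
light_month = hybrid_invoice(500, base_fee=1_000.0, included_units=1_000, overage_rate=0.5)
heavy_month = hybrid_invoice(3_000, base_fee=1_000.0, included_units=1_000, overage_rate=0.5)
capped_month = hybrid_invoice(3_000, base_fee=1_000.0, included_units=1_000, overage_rate=0.5, cap=1_500.0)
print(light_month, heavy_month, capped_month)
```

The base fee is the recurring floor the vendor can forecast, the overage ties upside to actual usage, and the cap gives the buyer a worst-case number to budget against. The cost of the cap falls on the vendor, who absorbs any usage above it.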

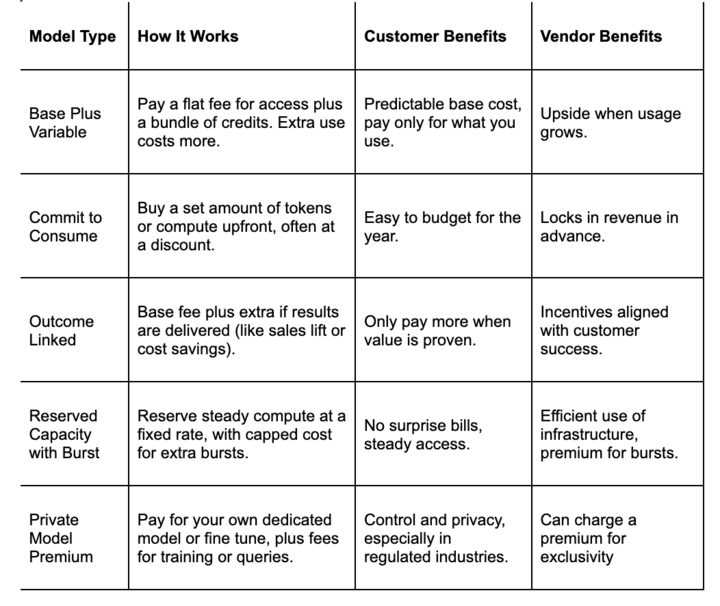

Here are a few hybrid approaches I am seeing work in practice today:

Addressing corporate ROI on AI

Many reports have found that AI has not yet delivered ROI for corporations. What I’ve found is that the problem lies less with the models themselves and more with the enterprise environment. Legacy systems, strict security requirements and fragmented datasets create friction at every stage. Compliance reviews, procurement cycles, and integration with outdated workflows often trap projects in endless pilots. By contrast, individuals, prosumers and AI-native companies can adopt AI tools instantly, capture value in daily work, and adjust habits without institutional drag. This helps explain why adoption appears faster and more impactful at the individual or AI-native level, while incumbent corporate returns lag.

It’s still early and AI-native business models will continue to evolve. Compute and token costs, which shape margins and pricing today, may decline over time and begin to resemble utilities. Customers will keep pushing for predictability even as pricing grows more dynamic, which means hybrid models will remain central to adoption. Recurring revenue is not going away, but it is being redefined around usage, outcomes and learning velocity. The more radical shift is still ahead: as clusters of agents begin to collaborate, and as more of the software stack is generated by AI itself, we may find that the very definition of a company changes. The businesses that emerge will likely orchestrate living systems of intelligence that compound value in ways SaaS could never imagine.